Going Nuclear: Modeling Threats to Distributed Systems

Regional Infrastructure Failures

Suppose the internet backbone providing service to our datacenter craps out. What happens if a hurricane comes through and floods the datacenter? What happens if a trigger-happy organization nukes the general area? We need to assume our datacenter will simply cease to exist one day without warning. This is conceptually easy enough to solve for — add more datacenters. If we need more datacenters, we therefore need datacenters spread far enough apart that whatever took out one datacenter won’t take out another.

Determining the best place for a datacenter is not an easy task. There are lots of things to take into consideration. A short list might look something like this:

- Year-round weather conditions

- Availability to the internet backbones

- Geopolitical stability of the region

- Local economic stability affecting cost of operations

- Relative distance to other datacenters

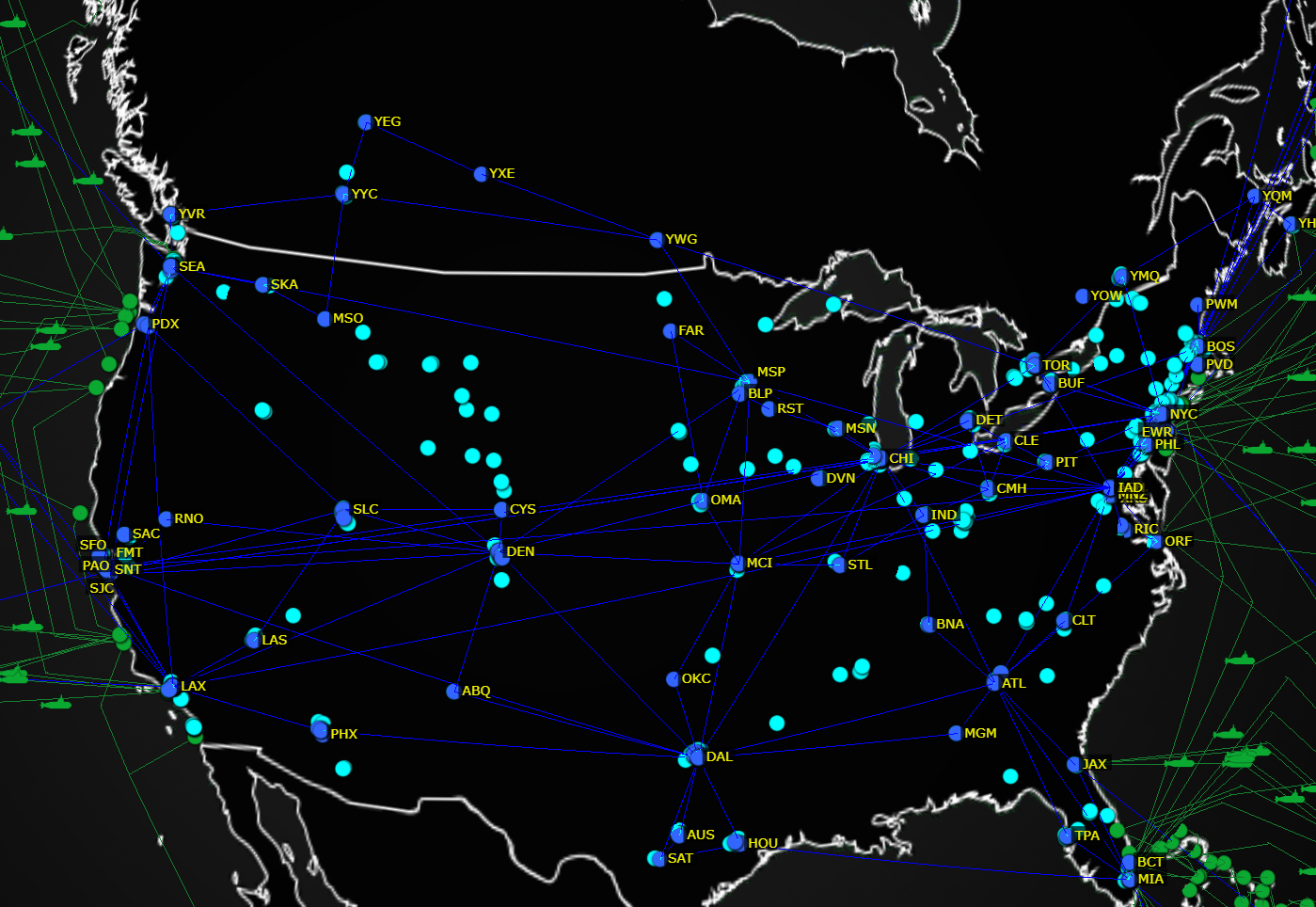

A few of these are easy enough to reconcile. An example map of the internet shows that there are plenty of hubs where we could stick a datacenter.

We could overlay such maps, find cities where there’s usually nice weather, investigate the various required stability (geopolitical/economic/etc.), and pick a bunch of candidates.

Geopolitical stability is a tricky thing to measure. For instance, Canada is stable, and predictably so for the foreseeable future. It’s highly unlikely the government would boot us out of the country or destroy our datacenter. However, if nuclear war struck, it might not be wise to build a datacenter near Ottawa because the city would be a prime target for attack.

Then again, winter storms in Ottawa suck so I wouldn’t want to build a datacenter there anyway.

Once we have our candidates we need to consider the desired distribution throughout the world. Since we’ve got agents all around world, we need a pretty even distribution of these datacenters. It seems logical that we’d want agents to check in to the nearest datacenter, but in reality we don’t necessarily care where the agent checks in. What we care about is that if a datacenter is taken out of commission, no others are also taken out, and that the agents can fail over to other datacenters. What this does mean though is that if an agent checks into one datacenter then the submitted data needs to be replicated immediately to all the other datacenters before it can be considered committed.

Actually, it doesn’t require immediate replication to all datacenters at once; it might just need replication to a quorum of datacenters before the data is considered committed.

Another thing to consider is the total number of datacenters required. If one goes down the rest have to pick up the slack. That means each datacenter cannot be running at full capacity. For the sake of argument lets say we choose to build 16 datacenters. I’ve picked this number because that’s the number of Microsoft Azure datacenters worldwide. They’ve already done their due-diligence picking good locations (and I already have a pretty map of it).

Edit: 66+ regions with data centers now.

Assuming a roughly even distribution of load per datacenter, that’s approximately 312,500,000 check-ins a day per datacenter. Assuming total nuclear war (that’s a phrase I never thought I’d use) and we lose all but one datacenter, that means that one datacenter needs to take on 15 times the load, which is 4.7 billion check-ins a day.

Practically speaking if 15 datacenters went offline suddenly I suspect quite a few agents would also go offline, so the total number of check-ins would decrease considerably.

What does that mean for the size of a single datacenter then? We haven’t really looked at that yet. If we based our assumptions on the diagram at the beginning we could conceivably have 1-4 servers. One for each element, or one for all elements. It would be really nice if it took more than a single server going down to take out a datacenter, so we need at least a set of servers.

Let’s assume we’re working with commodity hardware, and each web server can handle an arbitrary load of 5000 requests a second. Now, since our agents have a fixed schedule of checking in every minute, we can easily figure out there would be around 217,000 requests a minute or 3616 a second, with a compensation of some sort for time skew of each agent. A single server could handle the load fairly easily at 72% capacity, and doubling the server count for redundancy means we’re looking at only ~36% capacity (admittedly, there’s a bit more involved so its not simply half, but we’re keeping the math simple).

That’s actually surprising to me. I assumed we’d need a few servers to handle the load. Of course, this isn’t taking into account the overhead of persisting and replicating the data across datacenters.

But then we’ve got the analysis side to consider. We don’t know how many people want to review the data, and report generation on millions of data points isn’t computationally cheap. For now we can assume we only want to report on significant changes, so the computational overhead probably isn’t a lot. We can dedicate one server to that.

So lets assume each datacenter looks the same with the following servers:

- 2 agent check-in web servers

- 2 database servers

- 1 compute server

Our connection to the internet should be redundant as well.

Therefore overall we’ve got 32 servers for agents checking in, 32 database servers, and 16 compute servers throughout the world, communicating across 32 internet backbones. In contrast, Microsoft has at least a million servers worldwide in all of it’s datacenters for all of its services, and Netflix uses 500-1000 servers just for it’s front-end services.

Of course, we’re forgetting the total nuclear war scenario again.

Our datacenters currently have a maximum load of 10,000 requests a second, so that means we could take on the load of a smidge more than one other datacenter. That’s actually pretty good for most scenarios, but not so good for ours because we still need 8 times the capacity in a single datacenter for our worst case scenario. If we keep the math simple and just multiply the current number of servers by 8, we get 640 servers worldwide. It actually might be lower because we probably wouldn’t need that many database and compute servers in one location. Additionally we don’t need all those servers running at once. We only need them when another datacenter goes offline.

I think we’ve covered off enough for now to keep our servers online and our data available in case of catastrophe. What about risk to the agents?